Changing the News at The New York Times

As news distribution shifted away from print to almost exclusively digital content, the task of creating, curating and correcting information also shifted away from professional journalists to the broader media industry and the general public. Unfortunately, this trend also opened the opportunity for more errors to be made, and later removed or edited without a trace.

The ability to update content breaks the information flow process: news stories no longer represent a snapshot in time, but instead living, mutating organisms.

It also enables the creation and spread of misinformation. The extent of this constant updating is not yet known or widely researched, despite it being a common practice in the current media environment.

So here’s where this study comes in! I conduct a textual analysis of changes made on the New York Times website using two tools: the diffengine and NewsDiffs. This study not only sheds light on this vital, yet largely unknown complication in the process of information dissemination within the greater media network, it also provides a sample approach for tracking and analyzing these changes.

The “diffengine” is self-described as a “utility for watching RSS feeds to see when story content changes” (GitHub). As far as I understand, it works by tracking RSS feeds and comparing them with the corresponding data in the Internet Archive. It is set up to find content that was changed on specific websites, take a picture of the changes and post them on Twitter pages. Though many of these accounts have recently been suspended by Twitter, the ones still active as of today (9/10/2018) are: The Guardian, The Washington Post, The Wall Street Journal, The White House Blog, and Breitbart.
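The core comparison step, detecting what changed between two snapshots of the same text, can be sketched in a few lines. This is only an illustration using Python's standard `difflib` module, not the diffengine's actual implementation; the function name `word_diff` and the two sample sentences are hypothetical.

```python
import difflib


def word_diff(old: str, new: str) -> list[tuple[str, str, str]]:
    """Return (operation, old_words, new_words) for every changed span."""
    old_words, new_words = old.split(), new.split()
    matcher = difflib.SequenceMatcher(None, old_words, new_words)
    changes = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":  # keep only insertions, deletions and replacements
            changes.append(
                (op, " ".join(old_words[i1:i2]), " ".join(new_words[j1:j2]))
            )
    return changes


# Hypothetical snapshots of one sentence, captured at two different times.
before = "The square is a popular tourist spot"
after = "The square is a dangerous intersection"

for op, removed, added in word_diff(before, after):
    print(f"{op}: '{removed}' -> '{added}'")
```

Comparing word by word rather than character by character keeps the reported changes readable, which matters when the goal is to interpret edits rather than merely detect them.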

The diffengine creators state on GitHub that “the hope is that it can help draw attention to the way news is being shaped on the web”.

Also part of my analysis is NewsDiffs, the diffengine’s precursor, which was created at the Knight Mozilla MIT hackathon in June 2012.

This study focused on the changes made on the New York Times website. This media source was chosen because it is one of the oldest and most established news institutions in the US today.

In total, the sample size for this analysis was 113 instances of changes made to online content (100 images from the NYT diffengine Twitter page and 13 in-depth NewsDiffs case studies).

Through the analysis, I have found that the content changes fall into three broad themes:

“Developing Story” (33 from diffengine, 5 from NewsDiffs; 34% of total sample),

“Change in Tone” (25 from diffengine, 6 from NewsDiffs; 27% of total sample), and

“Correcting Inaccuracy” (42 from diffengine, 2 from NewsDiffs; 39% of total sample).
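As a quick sanity check, each theme's share of the sample can be recomputed from the counts listed above. The snippet below is a minimal sketch; the variable and function names are my own, and only the counts come from the study.

```python
# Counts per theme as reported above: (diffengine images, NewsDiffs case studies).
counts = {
    "Developing Story": (33, 5),
    "Change in Tone": (25, 6),
    "Correcting Inaccuracy": (42, 2),
}

# Total number of coded changes across both tools.
total = sum(d + n for d, n in counts.values())


def share(theme: str) -> int:
    """Percentage of the total sample for one theme, rounded to a whole number."""
    return round(100 * sum(counts[theme]) / total)


for theme in counts:
    print(f"{theme}: {sum(counts[theme])} of {total} ({share(theme)}%)")
```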

The following are discussions of these themes with illustrative, yet not exhaustive examples of each.

Theme 1: “Developing Story”

“Developing story” is the theme of non-linearity: online news articles no longer adhere to chronological order. With print newspapers, you could trace the development of any one story through different newspaper clippings. Now, with the digitization of the news, any one article is no longer a fixed point in that chronology, and thus it is increasingly difficult to determine how a story unfolded. As new details come in, the article can be updated, so that it always offers “new”, relevant and up-to-date content. Otherwise, the journalists would have to produce a new article every time new information came in, which is probably seen as a waste of time and resources. For example, one featured story from NewsDiffs is titled: “Gunman Massacres 20 Children at School in Connecticut; 28 Dead, Including Killer” (NYT). This tragic story is about a school shooting in Connecticut, originally published on December 14, 2012 at 12:09 pm. NewsDiffs tracked its 19 subsequent versions, clearly updated as the story developed (see Figure 1). From the titles of the changed posts below, we see how the story went from “shooting reported” to the more detailed “multiple fatalities reported” to the more data-driven “18” and then “20 children killed”. On December 15 the death of the killer was added to the title, and “kills” was changed to the harsher “massacres”.

Figure 1 “Gunman Massacres 20 Children at School in Connecticut; 28 Dead, Including Killer” http://www.newsdiffs.org/article-history/www.nytimes.com/2012/12/15/nyregion/shooting-reported-at-connecticut-elementary-school.html

From looking at the evolving content of the article itself, we see an example of a vital change made to the story. The original article read: “The principal had buzzed Mr. Lanza in because she recognized him as the son of a colleague”. This text was removed, replaced with: “Although reports at the time indicated that the principal of the school let Mr. Lanza in because she recognized him as the son of a colleague, he shot his way in, defeating a security system requiring visitors to be buzzed in”.

The earlier version implied that the principal had let the shooter into the school, before she herself was shot. Later it was explained that earlier reports of the principal’s actions were not accurate, and in fact the shooter had forced his way into the school despite a security system in place. This is problematic, as the false accusation that the deceased principal played a key role in letting the shooter into the school, essentially aiding in the “massacre”, was released onto the Internet. Because it is not common practice for the general public to continuously check back on online articles for updates and corrections, it is impossible to say how far the misinformation about her actions spread through social media and word-of-mouth, and whether people still believe the original reports to this day. Since misinformation spreads faster and wider than the truth (Vosoughi, Roy, & Aral, 2018), any attempts at corrections or fact-checking fall short of overriding the original error. This is a tragic example of how putting out unverified information as a story develops can lead to the spread of misinformation and have lasting effects on people’s lives and reputations.

Theme 2: “Change in Tone”

The theme “change in tone” focuses on framing: the ability of the media gatekeepers to focus on a specific aspect of an issue and ignore any other perspectives. In essence, framing theory suggests that how something is “framed” or presented to the reader influences how people process that information. It relies on heuristic shortcuts to make something meaningless into something meaningful for the reader (Goffman, 1974). A great illustrative example for this theme is the article “Yvonne Brill, a Pioneering Rocket Scientist, Dies at 88”. This is a case of bad writing that caused outrage on social media when it was first published (Sorkin, 2013). Yvonne Brill, a rocket scientist who happened to be female, was described in her obituary as being a talented cook: “she made a mean beef stroganoff” and “followed her husband from job to job and took eight years off work to raise three children”.

http://www.newsdiffs.org/diff/192021/192137/www.nytimes.com/2013/03/31/science/space/yvonne-brill-rocket-scientist-dies-at-88.html

Only in the second paragraph was it mentioned that she was “also a brilliant rocket scientist”. The NYT pretty quickly (according to NewsDiffs it was about five hours later) removed the offending statement about the beef and put the “was a brilliant rocket scientist” statement first. This is an interesting case, because the reason for the change was obvious: the original sparked outrage, and that outrage prompted the change. It was also not a factual error, unverified information or exaggerated findings; it was a sexist comment that the author made and later had to remove. Subsequently, many other popular media articles, tweets and blog posts were written about this case (Brainard, 2013), and the surprising thing was that the beef comment, though sexist, was not actually the worst of the piece. The more offending statement is the one still on the NYT site: “followed her husband from job to job and took eight years off work to raise three children”. Many stood up and protested that this reflects a long-standing problem in how journalists write profiles of women scientists, mostly exploring the mystery of how they could actually be both women and scientists, and the need to reassure readers about the well-being of their children and married life (Brainard, 2013). This offending statement, though, has not been removed from the site to this day.

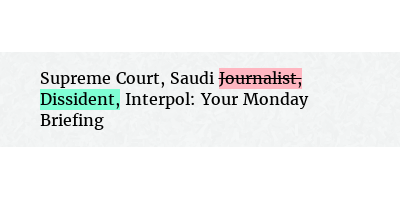

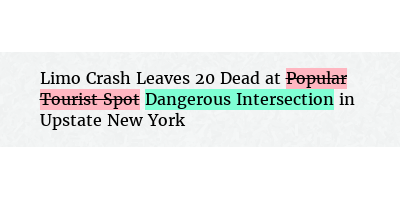

The practice of changing content to reframe its tone is also seen in the diffengine examples below: “journalist” is changed to “dissident” and “popular tourist spot” is changed to “dangerous intersection”.

Though further research is needed to assess the causes of these types of changes, it seems logical that they are made based on external pressures. For example, the “journalist” to “dissident” could have been a result of changing political pressures and the “popular tourist spot” to “dangerous intersection” could be a response to NYC not wanting to scare away tourists from a popular destination based on financial profit concerns.

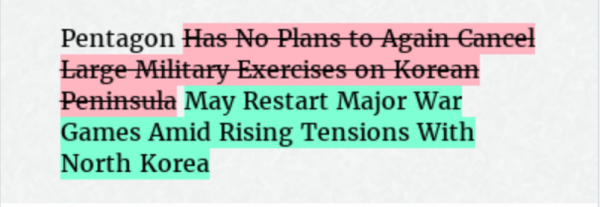

As a final example, the change in headline from “Pentagon has no plans to again cancel large military exercises” to “Pentagon may restart major war games amid rising tensions” is not only a very harsh reframing of the story, but also fear-mongering about a possible war with North Korea. It is plausible to assume that, because the NYT enjoys a high level of media trust, a sensational headline from such a trusted gatekeeper might affect a reader’s perception of reality.

This matters especially because headlines serve not only to attract attention to a story, summarize it and “hook” a reader, but also to “influence the interpretation of a story” (Marquez, 1980; Freimuth, 1984). Thus, it is important not only to be aware that this live editing and reframing is happening, but also to investigate what external pressures are influencing the news and why, especially since objectivity is one of the core practices of the field of journalism (Lewis, 2012). If any external pressures are influencing news coverage in a way that forces journalists to reframe stories against norms of objectivity, this is a major change in how news functions in the US and requires serious attention.

Theme 3: “Correcting Inaccuracy”

The “correcting inaccuracy” theme is one of error correction and fact-checking. This theme is aligned with the traditional roles of journalists as fact-checkers, as well as producers and curators of information (Lewin, 1951; Shoemaker & Vos, 2009). There were some examples of simple grammatical or spelling error correction: “Weider” to “Weirder” or “Chicago” to “Chicago’s”, but these were minimal.

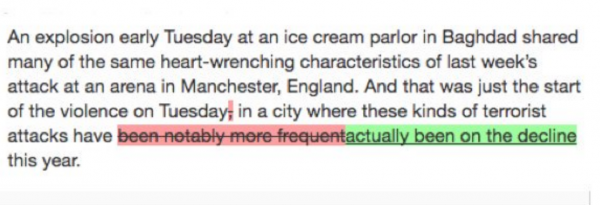

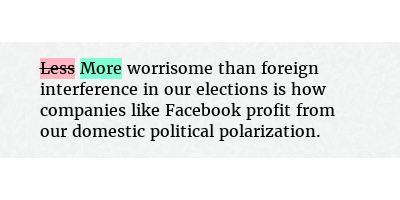

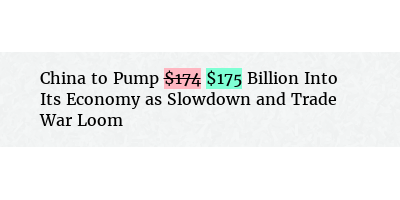

The changes I was more interested in were factual inaccuracies. While the other themes might indirectly cause confusion in the minds of the public, publishing inaccurate information directly creates and then spreads misinformation. In the sample, these changes included inaccurately reported statistics (e.g. from “174” to “175” million dollars) and, even more worrisome, completely false stories: “Terrorist attacks have been notably more frequent” was changed to “Actually been on the decline”, and “Less worrisome” was changed to “More worrisome” in the headline “More worrisome than foreign interference in our elections is how companies like Facebook profit from our domestic political polarization”.

Though fact-checking and corrections should be a necessary feature of all professional written content, they should take place prior to publication because information online can spread easily, and then be difficult, if not impossible, to correct. The ability to publish and correct later stems from a deeper issue: the heightened speed of production that came along with the Internet age forces journalists to produce multiple stories a day and leaves minimal time for close reading of the research or fact-checking their sources (Usher, 2014). This opened up the opportunity for errors to be made. Thus, as facts are checked, changes are made live to content to make it accurate, often changing the entire meaning of the text.

Conclusion

In our current media environment, changes to online content can be made without any traces or explanations of what was removed and what was added. Because this phenomenon is not yet commonly known or widely researched, despite evidence of it commonly happening in newsrooms today (Usher, 2014), this study developed a typology of the changes being made to online content on the New York Times website. I identified three themes: “developing story”, in which content is updated as new information about an ongoing breaking news event is released; “change in tone”, in which stories are reframed as a result of possible external pressures; and “correcting inaccuracy”, in which journalists correct factual errors previously published.

The importance of this study lies in the fact that all three types of updating enable the creation and spread of misinformation, which spreads much faster and wider than the truth (Vosoughi, Roy, & Aral, 2018), has lasting, negative effects on people’s lives (Niederdeppe & Levy, 2007), and is difficult to eliminate, even after being debunked (Hochschild & Einstein, 2015). Because the consequences of misinformation are potentially so impactful on both an individual and societal scale, more work needs to be done to measure its extent and methods of spread throughout media networks. This typology informs future network analysis research and also provides a sample approach for tracking and analyzing these changes. Though not perfect, tools such as the diffengine and NewsDiffs give unique insight into what kind of content is being changed. With this conceptualization and operationalization in place, we can now better design studies of misinformation diffusion within media networks, especially ones that require automated machine learning algorithms. By identifying this typology, I hope to shed light on this vital, yet largely unknown phenomenon of mutating media content, as well as provide additional context to future research tracing the spread of information and misinformation in the current media environment.